From Principles to Playbook: Build an AI-Governance Framework in 30 Days

Your 4-Week Sprint to Audit, Risk-Tier, and Operationalize Responsible AI

The gap between aspirational AI principles and operational reality is where risks fester – ethical breaches, regulatory fines, brand damage, and failed deployments. Waiting for perfect legislation or the ultimate governance tool isn't a strategy; it's negligence. The time for actionable governance is now.

This isn't about building an impenetrable fortress overnight. It's about establishing a minimum viable governance (MVG) framework – a functional, adaptable system – within 30 days. This article is your tactical playbook to bridge the principles-to-practice chasm, mitigate immediate risks, and lay the foundation for robust, scalable AI governance.

Why 30 Days? The Urgency Imperative

Accelerating Adoption: AI use is exploding organically across departments. Without guardrails, shadow AI proliferates.

Regulatory Tsunami: From the EU AI Act and US Executive Orders to sector-specific guidance, compliance deadlines loom.

Mounting Risks: Real-world incidents (biased hiring tools, hallucinating chatbots causing legal liability, insecure models leaking data) demonstrate the tangible costs of inaction.

Competitive Advantage: Demonstrating trustworthy AI is becoming a market differentiator for customers, partners, and talent.

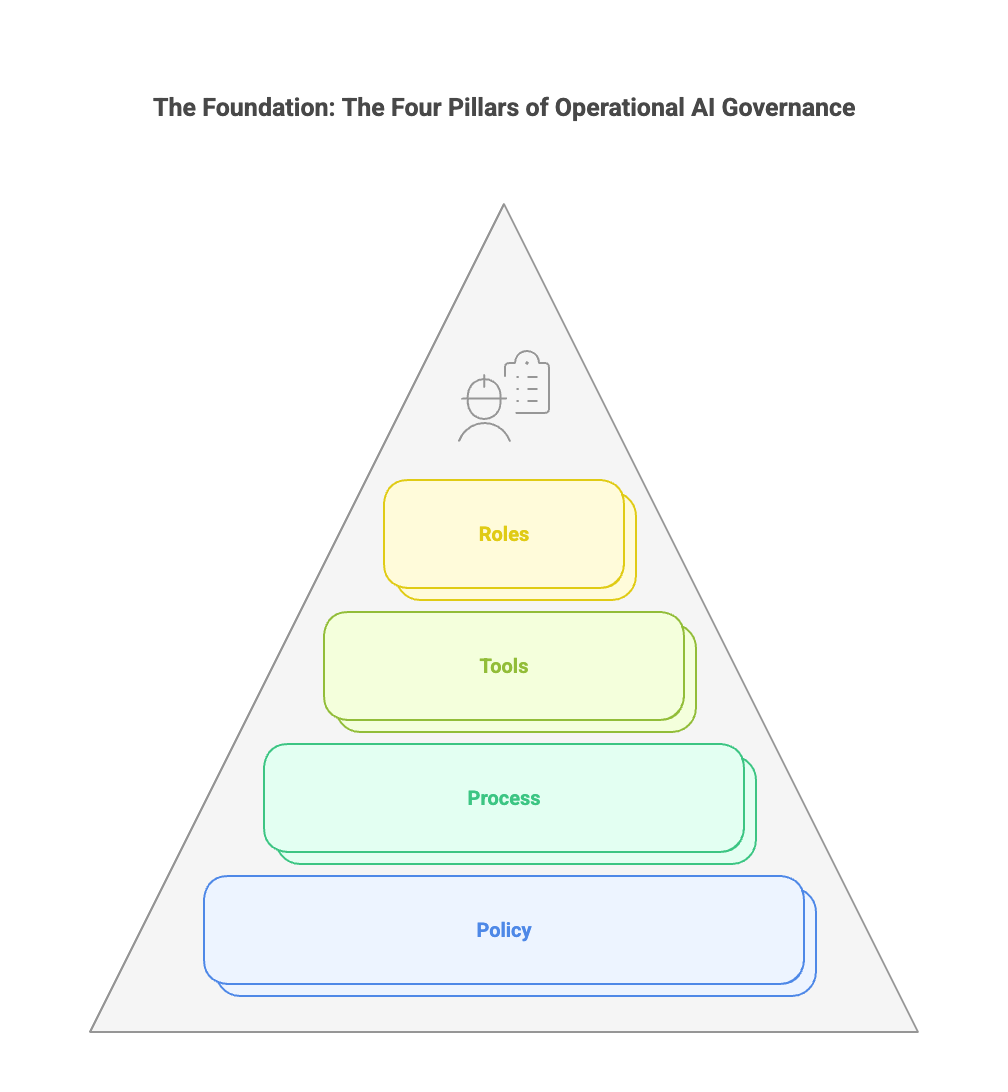

The Foundation: The Four Pillars of Operational AI Governance

An effective MVG framework isn't a single document; it's an integrated system resting on four critical pillars. Neglect any one, and the structure collapses.

Policy Pillar: The "What" and "Why" - Setting the Rules of the Road

Purpose: Defines the organization's binding commitments, standards, and expectations for responsible AI development, deployment, and use.

Core Components:

Risk Classification Schema: A clear system for categorizing AI applications based on potential impact (e.g., High-Risk: Hiring, Credit Scoring, Critical Infrastructure; Medium-Risk: Internal Process Automation; Low-Risk: Basic Chatbots). The assigned tier dictates the level of governance scrutiny. Where possible, align the tiers with an external reference such as the EU AI Act's risk categories or the NIST AI RMF.

Core Mandatory Requirements: Specific, non-negotiable obligations applicable to all AI projects. Examples:

Human Oversight: Define acceptable levels of human-in-the-loop, on-the-loop, or review for different risk classes.

Fairness & Bias Mitigation: Requirements for impact assessments, testing metrics (e.g., demographic parity difference, equal opportunity difference), and mitigation steps.

Transparency & Explainability: Minimum standards for model documentation (e.g., datasheets, model cards), user notifications, and explainability techniques required based on risk.

Robustness, Safety & Security: Requirements for adversarial testing, accuracy thresholds, drift monitoring, and secure development/deployment practices (e.g., OWASP AI Security & Privacy Guide).

Privacy: Compliance with relevant data protection laws (GDPR, CCPA, etc.), data minimization, and purpose limitation for training data.

Accountability & Traceability: Mandate for audit trails tracking model development, data lineage, decisions, and changes.

30-Day Goal: Draft and gain leadership sign-off on a concise, actionable Enterprise AI Policy & Standards Document (5-10 pages max), incorporating your principles and defining the risk classification and core mandatory requirements. Avoid lengthy philosophical debates; focus on actionable minimum standards.
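To make the Risk Classification Schema concrete, here is a minimal Python sketch of a three-tier triage rule. The high-risk domains come from the examples above; the `UseCase` fields and the decision rules themselves are illustrative assumptions, not a legal test, and should be replaced with the criteria your policy actually defines.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Domains taken from the High-Risk examples in the schema above.
HIGH_RISK_DOMAINS = {"hiring", "credit_scoring", "critical_infrastructure"}


@dataclass
class UseCase:
    """Hypothetical intake record; field names are assumptions."""
    name: str
    domain: str                # e.g. "hiring", "internal_automation"
    affects_individuals: bool  # outputs influence decisions about people
    fully_automated: bool      # no human reviews output before it takes effect


def classify(use_case: UseCase) -> RiskTier:
    """Assign a tier using simple, illustrative rules."""
    if use_case.domain in HIGH_RISK_DOMAINS:
        return RiskTier.HIGH
    if use_case.affects_individuals and use_case.fully_automated:
        return RiskTier.HIGH
    if use_case.affects_individuals or use_case.fully_automated:
        return RiskTier.MEDIUM
    return RiskTier.LOW


if __name__ == "__main__":
    screener = UseCase("resume_screener", "hiring", True, False)
    print(screener.name, classify(screener).value)
```

Keeping the rules this small makes them easy to debate and sign off in Week 1; nuance can be added once real projects have been triaged.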

Process Pillar: The "How" - Embedding Governance into Workflow

Purpose: Defines the concrete steps, workflows, and checkpoints that integrate governance into the AI lifecycle (from ideation to decommissioning).

Core Components:

AI Project Intake & Risk Triage: A standardized form/channel for reporting new AI projects. Initial assessment based on the Risk Classification Schema.

Mandatory Impact Assessments: A templated process (e.g., Algorithmic Impact Assessment - AIA) required before development begins for Medium/High-Risk projects. Covers intended use, data sources, potential biases, risks, mitigation plans, and compliance checks.

Stage-Gated Reviews: Defined checkpoints (e.g., Concept Approval, Pre-Development Impact Assessment Sign-off, Pre-Deployment Review, Post-Deployment Monitoring Review) with clear entry/exit criteria and required documentation.

Documentation Standards: Templates for Model Cards, Datasheets, and AIA reports ensuring consistency and essential information capture.

Incident Response Protocol: Clear steps for identifying, reporting, investigating, mitigating, and communicating AI-related failures or harms.

Deployment & Change Management: Process for approving deployment of new models or significant updates, including rollback plans.

30-Day Goal: Define and document the core AI Governance Workflow with key process maps and templates (Intake Form, AIA Template, Model Card Template). Pilot this workflow on 1-2 active projects.
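The stage-gated workflow can be expressed as a small lookup of required artifacts per gate. The sketch below is one possible encoding: the gate names follow the checkpoints listed above, while the artifact identifiers and the rule that Low-Risk projects skip the AIA are assumptions derived from the "Medium/High-Risk" wording.

```python
# Required artifacts per gate. Gate names mirror the checkpoints above;
# artifact identifiers are hypothetical labels for the three templates.
REQUIRED_ARTIFACTS = {
    "concept_approval": {"intake_form"},
    "pre_development": {"intake_form", "aia"},
    "pre_deployment": {"intake_form", "aia", "model_card"},
}


def missing_artifacts(gate: str, risk_tier: str, submitted: set[str]) -> set[str]:
    """Return the artifacts still needed before a project may pass `gate`."""
    required = set(REQUIRED_ARTIFACTS[gate])
    # Assumption: the AIA is mandatory only for medium/high-risk projects.
    if risk_tier == "low":
        required.discard("aia")
    return required - submitted


if __name__ == "__main__":
    gaps = missing_artifacts("pre_deployment", "high", {"intake_form", "aia"})
    print("Blocked on:", sorted(gaps))
```

A table like this is also a convenient thing to review during the pilot: if teams keep getting blocked on the same artifact, the template probably needs simplifying.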

Tools Pillar: The "Enablers" - Scaling Governance Efficiently

Purpose: Leverages technology to automate, scale, and enforce governance processes, making them sustainable beyond manual effort.

Core Components (Initial Focus):

Centralized Inventory/Registry: A single source of truth tracking all AI projects/models, their risk classification, owners, status, documentation links, and monitoring status. This can start as a simple, secure database or spreadsheet and later evolve to dedicated tools such as Truera, Robust Intelligence, Verta, or Collibra.

Bias & Fairness Testing Tools: Open-source (AIF360, Fairlearn) or commercial tools integrated into development pipelines for automated testing.

Explainability (XAI) Tools: Libraries (SHAP, LIME) or platforms to generate explanations for model outputs, especially for high-risk applications.

Model Performance & Drift Monitoring: Basic dashboards (using existing BI tools, Prometheus/Grafana) or specialized ML monitoring tools (Aporia, Arthur, Fiddler) to track accuracy, data drift, concept drift, and performance degradation in production.

Documentation & Workflow Management: Using existing platforms (Confluence, SharePoint, Jira, ServiceNow) or specialized GRC platforms adapted for AI to manage templates, workflows, approvals, and audit trails.

30-Day Goal: Establish the AI Model Inventory/Registry and identify/pilot at least one core tool (e.g., bias testing library or basic drift monitoring dashboard) integrated into an active project. Map existing tools that can be leveraged.
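If bias testing is the tool you pilot first, it helps to know what the two metrics named in the Policy pillar actually compute. The following is a hand-rolled, dependency-free sketch for binary predictions; it is meant for illustration only, and the open-source libraries mentioned above offer maintained implementations that are a better fit for a real pipeline. The function names and sample data are assumptions.

```python
from collections import defaultdict


def selection_rates(y_pred, groups):
    """Share of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(y_pred, groups):
    """Largest gap in selection rate between any two groups."""
    rates = selection_rates(y_pred, groups)
    return max(rates.values()) - min(rates.values())


def equal_opportunity_difference(y_true, y_pred, groups):
    """Largest gap in true positive rate between any two groups.

    Groups with no positive labels are left out of the comparison.
    """
    pos_pred = [p for t, p in zip(y_true, y_pred) if t == 1]
    pos_groups = [g for t, g in zip(y_true, groups) if t == 1]
    rates = selection_rates(pos_pred, pos_groups)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    y_true = [1, 0, 1, 1, 0, 1, 0, 0]
    y_pred = [1, 0, 1, 1, 0, 0, 0, 1]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    print(demographic_parity_difference(y_pred, groups))
    print(equal_opportunity_difference(y_true, y_pred, groups))
```

A value of 0 means the groups are treated identically on that metric; what counts as an acceptable gap is a policy decision, not something the code can settle.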

Roles Pillar: The "Who" - Defining Clear Ownership & Accountability

Purpose: Ensures clear ownership, responsibility, and expertise for executing and overseeing the governance framework. Avoids the "everyone's problem is no one's problem" trap.

Core Roles (Adapt to Org Size):

AI Project Owner: Business or technical lead responsible for the specific AI application's development, deployment, performance, and compliance with governance processes. Accountable for completing AIAs, documentation, and adhering to policy.

Model Developer/Data Scientist: Responsible for implementing technical requirements (bias testing, explainability, security, documentation) during development.

AI Governance Lead/Office (Often Part-Time Initially): Responsible for operating the governance framework – managing intake, maintaining inventory, coordinating reviews, tracking compliance, reporting. The central hub.

Cross-Functional Review Board (e.g., AI Ethics/Governance Board): Provides oversight, challenge, and approval at key stage gates (especially for High-Risk AI). Includes Legal, Compliance, Risk, Security, Privacy, Ethics, and relevant Business Leaders. Not involved in day-to-day work, but critical for high-stakes decisions.

Risk & Compliance: Ensures alignment with overall enterprise risk management and regulatory obligations.

Security & Privacy: Provides specific expertise and validation for security and privacy controls.

Executive Sponsor: Senior leader (e.g., CIO, CRO, CDO, CAO) championing governance, providing resources, and holding the organization accountable.

30-Day Goal: Clearly define and communicate the core governance roles and responsibilities (RACI matrix is ideal). Appoint the initial AI Governance Lead and establish the Review Board Charter & Membership.
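A RACI matrix is easy to keep as structured data, which lets you check it automatically. The sketch below assumes the common RACI convention of exactly one Accountable role and at least one Responsible role per task; the task names and assignments are illustrative examples based on the role descriptions above, not a recommended allocation.

```python
# R = Responsible, A = Accountable, C = Consulted, I = Informed.
# Tasks and assignments are hypothetical examples.
RACI = {
    "complete_aia": {
        "Project Owner": "A", "Developer": "R",
        "Governance Lead": "C", "Review Board": "I",
    },
    "maintain_inventory": {
        "Governance Lead": "A", "Project Owner": "R", "Developer": "I",
    },
    "approve_high_risk_deployment": {
        "Review Board": "A", "Governance Lead": "R",
        "Project Owner": "C", "Security": "C",
    },
}


def validate_raci(matrix: dict) -> list[str]:
    """Return a list of problems; an empty list means the matrix passes."""
    problems = []
    for task, assignments in matrix.items():
        codes = list(assignments.values())
        accountable = codes.count("A")
        if accountable != 1:
            problems.append(
                f"{task}: expected exactly one Accountable, found {accountable}"
            )
        if "R" not in codes:
            problems.append(f"{task}: no Responsible role assigned")
    return problems


if __name__ == "__main__":
    issues = validate_raci(RACI)
    print(issues if issues else "RACI matrix passes basic checks")
```

Running a check like this whenever the matrix changes guards against the "everyone's problem is no one's problem" trap creeping back in.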

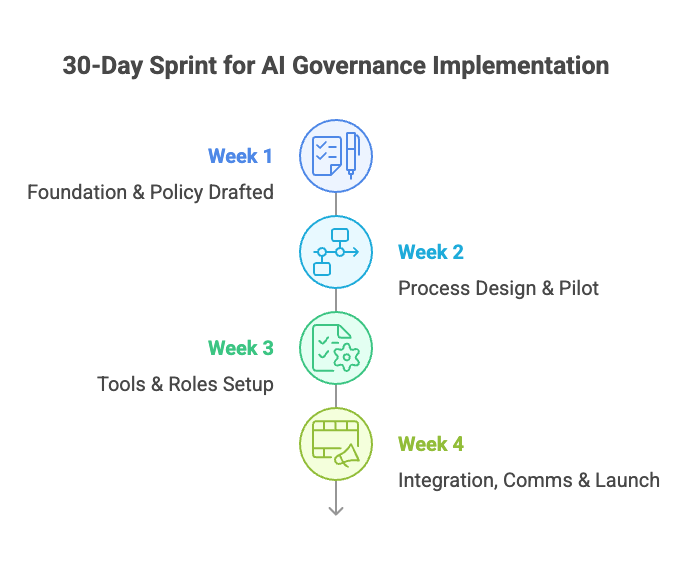

The 30-Day Sprint: Your Week-by-Week Execution Plan

(Assumes a small, dedicated core team - e.g., Governance Lead, Legal/Compliance Rep, Tech Lead, Risk Officer - supported by part-time SMEs)

Week 1: Foundation & Policy (Goal: Policy Draft Ready for Review)

Day 1-2: Kickoff & Stakeholder Mapping. Assemble core team. Identify key stakeholders (Legal, Compliance, Security, Privacy, Risk, IT, key business units using AI). Map known AI projects (shadow AI hunt!).

Day 3-4: Gap Analysis & Principles Review. Audit existing relevant policies (IT, Security, Privacy, Ethics, Procurement). Review current AI principles. Identify immediate high-risk AI use cases.

Day 5-6: Draft Risk Classification Schema & Core Requirements. Define simple High/Medium/Low criteria. List 5-7 non-negotiable mandatory requirements based on principles and regulations.

Day 7: Develop Policy Draft. Consolidate schema and requirements into a concise Enterprise AI Policy & Standards draft document.

Deliverable: Draft AI Policy & Standards Document.

Week 2: Process Design & Pilot (Goal: Core Process Defined & Piloted)

Day 8-9: Design Intake & Triage Process. Create AI Project Intake Form. Define initial risk assessment steps.

Day 10-11: Develop Impact Assessment (AIA) Template. Focus on essential questions for risk identification and mitigation planning. Create a Model Card template skeleton.

Day 12: Map Stage-Gated Workflow. Define key review points (Concept, Pre-Dev, Pre-Deploy) and required artifacts for each. Outline incident response steps.

Day 13-14: Select & Pilot Process. Choose 1-2 active (preferably medium-risk) AI projects. Run them through the new intake, AIA, and documentation process. Gather feedback.

Deliverable: Defined Governance Workflow (Map), AIA Template, Model Card Template, Intake Form. Pilot feedback report.
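For the Model Card template skeleton, one lightweight option is a typed record plus a completeness check. The sketch below is an assumption about how you might structure it; the field names follow the Model Card item in the pre-deployment checklist later in this article, and everything else (class name, helper name, sample values) is hypothetical.

```python
from dataclasses import dataclass, field, fields


@dataclass
class ModelCard:
    """Skeleton Model Card; empty values mean 'not yet filled in'."""
    purpose: str = ""
    version: str = ""
    owners: list[str] = field(default_factory=list)
    training_data_summary: str = ""
    performance_metrics: dict[str, float] = field(default_factory=dict)
    fairness_assessment: str = ""
    limitations: str = ""
    usage_recommendations: str = ""


def incomplete_fields(card: ModelCard) -> list[str]:
    """List the fields that are still empty, in declaration order."""
    return [f.name for f in fields(card) if not getattr(card, f.name)]


if __name__ == "__main__":
    draft = ModelCard(purpose="Rank support tickets by urgency", version="0.1")
    print("Still to complete:", incomplete_fields(draft))
```

During the pilot, the list of incomplete fields doubles as feedback on the template: fields that teams consistently cannot fill in may need clearer guidance.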

Week 3: Tools & Roles Setup (Goal: Inventory Live, Tools Piloted, Roles Defined)

Day 15-16: Stand Up Inventory/Registry. Populate with known projects from Week 1 and pilot projects. Define mandatory fields (Owner, Risk Class, Status, Doc Links).

Day 17-18: Assess & Select Initial Tool. Evaluate immediate need (e.g., bias testing vs. drift monitoring). Choose one open-source or readily available tool. Integrate it into one pilot project pipeline.

Day 19: Define Core Roles & RACI. Draft clear responsibilities for Project Owner, Developer, Governance Lead, Review Board, Compliance, Security. Create a RACI matrix for key governance tasks.

Day 20-21: Establish Review Board Charter. Define scope, membership, meeting frequency, decision authority (especially for High-Risk). Appoint initial members. Appoint AI Governance Lead.

Deliverable: Operational AI Model Inventory/Registry. One governance tool piloted. Defined Roles & Responsibilities Document (incl. RACI). Review Board Charter Draft.
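A spreadsheet-grade inventory is enough for the first 30 days, as long as the mandatory fields are enforced. This sketch validates entries against the four mandatory fields named in Day 15-16 and exports them as CSV. The snake_case field names, the extra `name` column, and the sample entries are assumptions.

```python
import csv
import io

# Mandatory fields from the Week 3 plan (Owner, Risk Class, Status, Doc Links).
MANDATORY_FIELDS = ["owner", "risk_class", "status", "doc_links"]


def validate_entry(entry: dict) -> list[str]:
    """Return the mandatory fields that are missing or blank."""
    missing = []
    for name in MANDATORY_FIELDS:
        value = entry.get(name)
        if value is None or not str(value).strip():
            missing.append(name)
    return missing


def to_csv(entries: list[dict]) -> str:
    """Serialize entries to CSV text that opens in any spreadsheet tool."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=["name"] + MANDATORY_FIELDS, extrasaction="ignore"
    )
    writer.writeheader()
    writer.writerows(entries)
    return buffer.getvalue()


if __name__ == "__main__":
    entries = [
        {"name": "resume_screener", "owner": "HR Analytics",
         "risk_class": "high", "status": "pilot",
         "doc_links": "wiki/resume-screener"},
        {"name": "faq_bot", "owner": "", "risk_class": "low",
         "status": "production", "doc_links": "wiki/faq-bot"},
    ]
    for entry in entries:
        print(entry["name"], "missing:", validate_entry(entry))
    print(to_csv(entries))
```

Entries that fail validation are worth following up in person, since a model with no named owner is usually the shadow AI the Week 1 hunt was looking for.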

Week 4: Integration, Comms & Launch (Goal: Framework Operational, Org Aware)

Day 22: Refine Policy & Processes. Incorporate feedback from Week 2 pilot and Week 3 activities. Finalize Policy, Workflow, Templates.

Day 23: Develop Training & Comms Materials. Create a 1-pager overview, quick reference guide for Project Owners/Developers, and a short presentation.

Day 24: Executive Briefing & Formal Sign-Off. Present the finalized MVG framework, 30-day outcomes, and next steps to Executive Sponsor and senior leadership. Secure formal approval.

Day 25: Enterprise Communication. Launch the framework internally via email, intranet, town hall announcement. Distribute comms materials.

Day 26: Initial Training. Conduct first training session for key stakeholders, project owners, and developers.

Day 27-30: Formalize & Monitor. Finalize Review Board membership and schedule first meeting. Ensure Inventory is updated. Monitor intake of new projects. Establish a simple feedback loop for framework improvements.

Deliverable: Final Signed Policy & Standards. Finalized Process Docs & Templates. Live Inventory. Active Governance Lead & Review Board. Trained initial cohort. Official Framework Launch Communication.

Your First-Draft AI Governance Checklist for Model Launch (Pre-Deployment Gate)

Use this checklist before deploying any new AI model or significant update. Tailor rigor based on Risk Classification (High-Risk requires exhaustive checks).

I. Purpose & Context:

* [ ] Clear statement of model's intended purpose and use case documented.

* [ ] Alignment with approved business justification and ethical review (if applicable).

* [ ] Defined scope and limitations documented (what it shouldn't be used for).

II. Data & Development:

* [ ] Data Provenance: Sources of training/validation data documented & assessed for relevance/quality.

* [ ] Bias Assessment: Rigorous testing for unwanted bias across relevant protected groups (using defined metrics) completed. Results documented in Model Card.

* [ ] Bias Mitigation: Steps taken to mitigate identified biases documented and justified.

* [ ] Data Privacy: Compliance with data minimization, purpose limitation, and relevant privacy regulations (GDPR, CCPA, etc.) verified. PII handling documented.

* [ ] Security: Model development followed secure coding practices. Model artifact security validated.

III. Model Performance & Explainability:

* [ ] Performance Validation: Model meets defined accuracy, precision, recall, or other relevant performance metrics on hold-out validation data. Benchmarks documented.

* [ ] Robustness Testing: Basic testing for adversarial robustness or unexpected input handling conducted (especially for High-Risk).

* [ ] Explainability: Appropriate level of explainability (global/local) implemented and validated based on risk class. Method documented. User-facing explanations tested (if applicable).

IV. Compliance & Risk:

* [ ] Algorithmic Impact Assessment (AIA): Completed, reviewed, and approved by relevant stakeholders (Review Board for High-Risk).

* [ ] Regulatory Check: Specific legal/compliance review completed for applicable regulations (e.g., sector-specific rules, consumer protection laws).

* [ ] Risk Mitigation Plan: Documented plan for identified key risks (e.g., bias, security, failure modes, drift).

V. Operations & Monitoring:

* [ ] Deployment Plan: Clear roll-out strategy, including a phased deployment or canary testing plan if appropriate.

* [ ] Rollback Plan: Defined procedure for quickly reverting the model if critical issues arise.

* [ ] Monitoring Setup: Performance, drift (data/concept), and key fairness metrics monitoring configured and operational. Alert thresholds defined.

* [ ] Human Oversight Plan: Defined level and mechanism for human oversight/review documented and resourced.

VI. Documentation & Transparency:

* [ ] Model Card: Completed and submitted to Inventory/Registry. Includes key info: purpose, version, owners, training data summary, performance metrics, fairness assessment, limitations, usage recommendations.

* [ ] User Notification: Plan for informing end-users they are interacting with AI implemented (where required/appropriate).

* [ ] Audit Trail: Development and deployment steps logged in the inventory/registry.

VII. Approvals:

* [ ] Technical Validation: Sign-off from Model Developer/ML Ops lead.

* [ ] Business Owner Sign-off: Confirming model meets requirements and risks are accepted.

* [ ] Governance Lead Sign-off: Confirming adherence to governance process and documentation.

* [ ] Review Board Approval: Mandatory for High-Risk AI. Formal approval documented.

* [ ] Security & Privacy Sign-off: Confirming controls are adequate.
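The checklist can also be enforced as a simple automated gate once it lives in your workflow tool. The sketch below is one way to encode the decision: every checklist item must be ticked, and the sign-offs from Section VII must be present, with Review Board approval required only for High-Risk models as stated above. The identifiers and the shape of the result are assumptions.

```python
# Sign-offs required for every model (Section VII); identifiers are hypothetical.
BASE_SIGNOFFS = {
    "technical_validation",
    "business_owner",
    "governance_lead",
    "security_privacy",
}


def gate_decision(risk_class: str, checklist: dict[str, bool],
                  signoffs: set[str]) -> dict:
    """Decide whether a model may pass the pre-deployment gate."""
    required = set(BASE_SIGNOFFS)
    if risk_class == "high":
        # Review Board approval is mandatory for High-Risk AI.
        required.add("review_board")
    unchecked = sorted(item for item, done in checklist.items() if not done)
    missing = sorted(required - signoffs)
    return {
        "approved": not unchecked and not missing,
        "unchecked_items": unchecked,
        "missing_signoffs": missing,
    }


if __name__ == "__main__":
    result = gate_decision(
        "high",
        {"aia_approved": True, "rollback_plan": False, "monitoring_setup": True},
        {"technical_validation", "business_owner",
         "governance_lead", "security_privacy"},
    )
    print(result)
```

Returning the specific gaps, rather than a bare yes/no, gives project teams an actionable to-do list instead of a rejection.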

Beyond Day 30: Iterate, Scale, and Embed

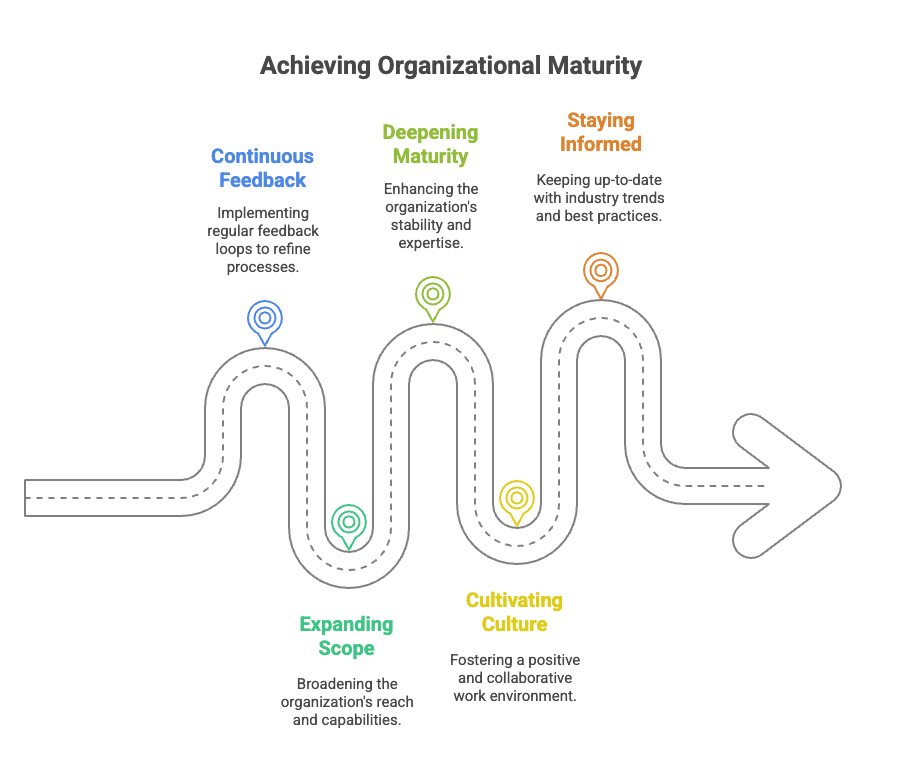

Your 30-day MVG framework is a vital starting point, not the finish line. The next critical phase involves:

Continuous Feedback & Refinement: Actively solicit feedback from project teams and reviewers. Adapt processes, templates, and tools based on real-world use. Review and update the policy quarterly.

Expanding Scope: Gradually apply the framework to lower-risk AI and existing production models. Incorporate generative AI use cases specifically.

Deepening Maturity: Enhance tooling (e.g., more sophisticated monitoring, automated compliance checks). Develop more granular standards for specific risk categories or AI types (e.g., LLMs). Build specialized training.

Cultivating Culture: Integrate AI governance training into onboarding. Recognize teams demonstrating excellent governance. Foster open discussion of AI risks and failures.

Staying Informed: Continuously monitor the evolving regulatory landscape, standards bodies (NIST, ISO), and best practices. Adapt your framework proactively.
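As one example of moving toward more sophisticated monitoring, data drift on a numeric feature can be tracked with the Population Stability Index (PSI), which compares the binned distribution of a baseline sample against recent production data. The sketch below is a plain-Python approximation under stated assumptions: equal-width bins derived from the baseline, a small floor to avoid division by zero, and an alert threshold of 0.25, which is a commonly cited rule of thumb rather than a standard. Specialized monitoring tools will offer more robust variants.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample (`expected`) and a recent sample (`actual`)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clip values outside baseline range
            counts[idx] += 1
        # Floor at a tiny value so empty bins do not cause log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    baseline = [float(v) for v in range(100)]
    shifted = [v + 30 for v in baseline]
    psi = population_stability_index(baseline, shifted)
    ALERT_THRESHOLD = 0.25  # assumption: tune per feature and risk class
    print(f"PSI={psi:.3f}", "ALERT" if psi > ALERT_THRESHOLD else "ok")
```

Whatever metric you choose, the alert threshold belongs in the policy or the Model Card so that reviewers can see what "drift" means for each model.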

Conclusion: From Paralysis to Proactive Governance

The complexity of AI governance is not an excuse for inaction. The 30-day sprint outlined here provides a concrete path to move beyond principles and establish a functional, risk-based governance framework. By focusing on the Four Pillars (Policy, Process, Tools, Roles), executing the Week-by-Week Execution Plan, and rigorously applying the First-Draft Checklist, you transform abstract commitments into operational reality.

This isn't about creating bureaucracy; it's about enabling responsible innovation. A minimum viable governance framework reduces catastrophic risks, builds stakeholder trust, ensures regulatory readiness, and ultimately allows your organization to harness the power of AI with greater confidence and sustainability. Start building your playbook today. The clock is ticking, and the stakes for your enterprise have never been higher.
